ABSTRACT

This paper examines how social media platforms are increasingly being used by hate groups to establish online networks and communities that support and promote racism. It explores communication theories and how their effects are driving individuals onto social media platforms to promote and spread hate speech. This hate speech has been found to circulate across a variety of social media platforms, both moderated and unmoderated, which together create a complex system of hate speech that is extremely difficult for platforms to crack down on.

HATE GROUPS AND THEIR NEFARIOUS WAYS

Hate groups are finding opportunity in social media platforms to establish growing networks of hate. Social media platforms create opportunities for individuals to engage in limitless social interaction and community building on a global scale, regardless of social or political agenda. By connecting and participating on these platforms, users can create a sense of community and belonging with other like-minded users, often resulting in encouraging and rewarding experiences (Kent, 2022). These opportunities do, however, also extend to users demonstrating malicious and antisocial behaviour, who are granted a similar opportunity to connect and build communities.

While hate speech and racially malicious discourse have been around since the conception of the internet, they have exploded with the development of social media (Siapera & Viejo-Otero, 2021). These developments mean that individuals and groups who wish to publish malicious content now have new opportunities to use social media platforms for mass mobilisation and distribution of their disruptive and hateful material (Delanty, 2018). With the World Wide Web at their fingertips through the access and opportunity provided by social network platforms, it is no wonder that racially malicious content is exploding on social media. This shift towards a more digitally focused approach is seeing a war take place on social media platforms over racial and social freedoms.

A report submitted to the Australian government in 2020 by the Online Hate Prevention Institute (OHPI) detailed key areas of concern regarding racial discrimination in Australia. One primary concern the OHPI identified during 2020 was a noticeable surge in racial discrimination, both in public and online, targeted towards Asian demographics (Online Vilification and Coronavirus, 2020). Hate groups used the origins of the COVID-19 virus to further establish and spread racially discriminatory rhetoric on social media platforms. While hate speech has exploded on social media platforms, group hate speech at in-person events has declined, which is attributed to the development of Australian legislation and improvements in public opinion.

THE SPIRAL OF SILENCE

This shift towards digitally driven hate content may be explained in part by the Spiral of Silence theory. The Spiral of Silence is a communication theory which contends that individuals are motivated by a fear of isolation, causing them to first assess whether their opinions align with majority public opinion (Stoycheff, 2016). This can reduce individuals’ willingness to speak out and often leads to the minority being silenced by the perceived threat of social isolation (Matthes, 2015). This reduced willingness to voice malicious views has helped to silence hate groups in Australia.

Australia still faces a very real problem with racism and social injustice, with a reported one-third of all Australians having experienced racism in their daily lives through individual interaction (Amnesty International Australia, 2021). Hate groups, however, have seen a reduction in size, primarily because Australia has progressively become one of the most multiculturally diverse countries in the world, with a consistently positive attitude towards immigration (Rajadurai, 2018). Since the early 1970s Australia has adopted many changes in favour of racial equity and freedom, with legislation like the Racial Discrimination Act 1975 (RDA) and the Australian Human Rights Commission Act 1986 helping to pave the way for racial equality in the country. Australia has progressed to a point where the majority of the population support freedom from discrimination (84%) and believe that the country has become a successful multicultural society (64%) (Amnesty International Australia, 2021). It is in this trend towards acceptance of a multicultural society that the effect of the Spiral of Silence can be observed.

Australia has successfully taken steps to reduce group public displays of hate and oppression; however, these antisocial and malicious behaviours live on through private gatherings and individual interaction. While legislation and fear of social isolation may deter hate groups from performing public acts of group hate in Australia, they have failed to stamp out hate speech in private settings and in the digital environment. An example is the National Socialist Network (Australia’s largest neo-Nazi group), which does not openly protest but tends to operate primarily through private social gatherings and online communications via a variety of moderated and unmoderated social media platforms, including Telegram, which has a reputation for harbouring hate groups (Tozer, 2021).

PLATFORMED RACISM

Platformed racism, as Dr. Matamoros-Fernández has termed it, is the mediation and circulation of race-based controversy via online media platforms (Matamoros-Fernández, 2017). It is a fitting term given how social media platforms are currently managed and operated by both users and corporations. Most corporations that run these platforms do aim to promote equality and a safe space for individuals through content moderation, algorithms designed to identify and remove malicious content, and platform standards and guidelines. While users may perceive moderation and algorithms to be adequate for combating platformed racism, they have proven unreliable and inadequate in reducing or eliminating platformed racism and online hate. It was reported in 2021 that, according to internal Facebook documents, executives at Facebook knew that their algorithms were racially biased against people of colour and enabled hate speech (Taylor, 2021).

Access, opportunity, and the mismanagement of moderation on social network platforms have allowed this form of platformed racism to grow. Online hate speech and racial discrimination are present on both moderated and unmoderated platforms, which has allowed platformed racism to build communities on social media despite the platforms’ efforts to quash it.

THE HATE MULTIVERSE

There is an abundance of choice when it comes to social media platforms, and while they all serve very similar purposes, not all platforms share the same standards and level of moderation when it comes to managing and eliminating malicious hate content.

With varying levels of moderation across platforms potentially restricting and limiting their antisocial and hateful influence, hate groups have adapted to ensure maximum reach. To circumvent platform moderation, these groups are establishing networks scattered across various platforms so that their message and community continue to grow in spite of the barriers and restrictions enforced by moderated platforms.

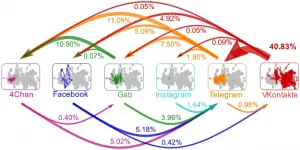

Figure 1. Hate Multiverse Connectivity Chart

The term Hate Multiverse was coined in a recent report published in Scientific Reports (Nature Publisher Group), which investigated the influence and spread of online hate groups (hate clusters, as the authors refer to them) and their content through social media platforms. The report found that hate groups were actively broadcasting and linking to unmoderated platforms. By using a multi-platform approach to circumvent the legislation and company standards that moderated platforms abide by, the hate clusters appear to have seemingly limitless reach (Velásquez et al., 2021).

Platforms investigated in the report included popular, moderated social media platforms like Facebook and Instagram, where social equality and freedom from discrimination are among the core principles the corporations aim for. There were also other commonly frequented platforms with little to no moderation, like Telegram and 4Chan, which users were actively being directed to from moderated platforms. With Telegram and 4Chan having a growing reputation as home to racially divisive and discriminatory discourse, it is no wonder that hate groups feel comfortable directing users to these havens (Zannettou et al., 2020). The statistics indicate that Telegram, identified as a central hub for hate groups, is a major gathering point where hate groups connect, allowing a hate cluster to broadcast invitations to other hate clusters, redirecting users and continuously developing hate networks (Quinn, 2021).

The report drew three main conclusions from its examination of the hate multiverse.

- It is very hard to eradicate hate groups as they tend to resurface after being dismantled

The study found that while hate groups and the malicious activity they generate may appear to be eliminated from a platform, in reality these groups have likely already formed links between moderated and unmoderated platforms. Moderation teams can identify and eliminate a hate group on a moderated platform, but they will be completely unaware of the group’s developments and connections outside of the platform. Hate groups actively use moderated platforms to recruit users and direct them off the platform. Once outside, these groups can then redirect back to the moderated platform.

- Hate groups are using their influence to direct users outside of platforms

Hate groups are able to facilitate individuals’ movement from moderated platforms to less moderated platforms through the distribution of links (Velásquez et al., 2021). By providing individuals with links to unmoderated platforms, they are able to control the growth of hate groups and create opportunities for individuals to fall under the influence of the groups’ views and beliefs.

- Social network platforms are gateways for content to pass through

The interconnected nature of social network platforms means content available on one platform can very easily be accessed by users globally on various platforms (Velásquez et al., 2021). Once content has begun to circulate among the hate clusters it is very difficult for platforms to eradicate the content.
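The three conclusions above can be illustrated with a small toy model. The sketch below is not the method used by Velásquez et al. (2021); it is a minimal illustration that assumes hate clusters can be treated as nodes in a directed graph and posted links as edges. All cluster names, the `LINKS` table, and the `reachable` helper are hypothetical and invented for this example.

```python
from collections import deque

# Hypothetical toy network: each key is a hate cluster ("platform/name") and
# each value lists the clusters it links to. All names are invented.
LINKS = {
    "facebook/group_a": ["telegram/channel_x"],
    "instagram/page_b": ["telegram/channel_x"],
    "telegram/channel_x": ["4chan/thread_y", "facebook/group_c"],
    "4chan/thread_y": ["telegram/channel_x"],
    "facebook/group_c": [],
}

# Assumption for this sketch: these are the moderated platforms.
MODERATED = {"facebook", "instagram"}


def platform_of(cluster):
    """Return the platform part of a 'platform/name' cluster id."""
    return cluster.split("/", 1)[0]


def reachable(links, start, removed=frozenset()):
    """Breadth-first search over links, skipping clusters that were removed."""
    if start in removed:
        return set()
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for target in links.get(node, []):
            if target not in removed and target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


# Moderators take down one cluster on a moderated platform.
after_one_removal = reachable(LINKS, "instagram/page_b", {"facebook/group_a"})

# Moderators take down every cluster on every moderated platform.
all_moderated = {c for c in LINKS if platform_of(c) in MODERATED}
after_full_removal = reachable(LINKS, "telegram/channel_x", all_moderated)
```

In this toy network, removing a single moderated cluster still leaves a path from the remaining moderated cluster out to the unmoderated platforms and back to another moderated cluster, and removing every moderated cluster leaves the unmoderated clusters linked to each other. This mirrors, in a very simplified way, why removal on one platform does not dismantle the wider network.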

CONCLUSION

Social media platforms provide excellent opportunities for people of all backgrounds to connect and network on a global scale. However, while social media technologies can be used as a platform for positive growth, they can also breed negative sentiment and othering through various postings and hate groups (Carlson & Frazer, 2020). While corporations and countries have made it more difficult for hate groups to exist on platforms, eliminating the hate multiverse seems an impossible task. Hate groups have quickly learned to restructure and adapt to digital environments that do not support their malicious behaviour and beliefs. By using both moderated and unmoderated platforms, these groups will continue to circumvent policies and standards designed to quash their antisocial behaviour and ideologies, allowing them to establish enduring networks and communities of hate (Velásquez et al., 2021).

Australia has shown great progress over the last few decades in establishing legislation and programs to combat racial discrimination. This has allowed the country to stride towards building a truly multicultural and accepting society; however, more attention needs to be placed on the digital space, where racially discriminatory rhetoric is much more active than in-person group activity in the physical space. Platformed racism is the new frontier of hate speech in Australia for as long as we continue to let it manifest.

List of figures

Figure 1. From Hate Multiverse Connectivity Chart, by Nature Publisher Group

https://www.nature.com/articles/s41598-021-89467-y

References

Amnesty International Australia. (2021, October 6). Does Australia have a racism problem? Amnesty International Australia. https://www.amnesty.org.au/does-australia-have-a-racism-problem-in-2021/

Carlson, B., & Frazer, R. (2020). “They Got Filters”: Indigenous Social Media, the Settler Gaze, and a Politics of Hope. Social Media + Society, 6(2), 205630512092526. https://doi.org/10.1177/2056305120925261

Delanty, G. (2018). Virtual Community: Belonging as communication. In Community (3rd ed., pp. 200–224). Routledge. https://doi.org/10.4324/9781315158259-10

Kent, M. (2022). Social Media, Communities and Networks. [Study Package]. Blackboard. https://lms.curtin.edu.au/

Matamoros-Fernández, A. (2017). Platformed racism: The mediation and circulation of an Australian race-based controversy on Twitter, Facebook and YouTube. Information, Communication & Society, 20(6), 930–946. https://doi.org/10.1080/1369118X.2017.1293130

Matthes, J. (2015). Observing the ‘Spiral’ in the Spiral of Silence. International Journal of Public Opinion Research, 27(2), 155. http://dx.doi.org/10.1093/ijpor/edu032

Online Vilification and Coronavirus. (n.d.). Online. Retrieved from https://parliament.vic.gov.au/images/stories/committees/lsic-LA/Inquiry_into_Anti-Vilification_Protections_/Submissions/Supplementary_submissions/038_2020.06.17_-_Online_Hate_Prevention_Institute_Redacted.pdf

Quinn, B. (2021, October 15). Telegram is warned app ‘nurtures subculture deifying terrorists’. The Guardian. https://www.theguardian.com/uk-news/2021/oct/14/telegram-warned-of-nurturing-subculture-deifying-terrorists

Rajadurai, E. (2018, December). Why Australia is the world’s most successful multicultural society. The McKell Institute. https://mckellinstitute.org.au/research/articles/why-australia-is-the-worlds-most-successful-multicultural-society/

Siapera, E., & Viejo-Otero, P. (2021). Governing Hate: Facebook and Digital Racism. Television & New Media, 22(2), 112–130. https://doi.org/10.1177/1527476420982232

Stoycheff, E. (2016). Under Surveillance. Journalism and Mass Communication Quarterly, 93(2), 296–311. http://dx.doi.org/10.1177/1077699016630255

Taylor, T. (2021, November 21). Facebook knew its algorithms were biased against people of color. Mail Online. https://www.dailymail.co.uk/news/article-10227659/Facebook-knew-algorithms-biased-against-people-color.html

Tozer, N. M., Joel. (2021, August 16). Inside Racism HQ: How home-grown neo-Nazis are plotting a white revolution. The Age. https://www.theage.com.au/national/inside-racism-hq-how-home-grown-neo-nazis-are-plotting-a-white-revolution-20210812-p58i3x.html

Velásquez, N., Leahy, R., Johnson, R. N., Lupu, Y., Sear, R., Gabriel, N., Jha, O. K., Goldberg, B., & Johnson, N. F. (2021). Online hate network spreads malicious COVID-19 content outside the control of individual social media platforms. Scientific Reports (Nature Publisher Group), 11(1). http://dx.doi.org/10.1038/s41598-021-89467-y

Zannettou, S., Baumgartner, J., Finkelstein, J., & Goldenberg, A. (n.d.). WEAPONIZED INFORMATION OUTBREAK: A Case Study on COVID-19, Bioweapon Myths, and the Asian Conspiracy Meme. Network Contagion Research Institute. Retrieved from https://networkcontagion.us/reports/weaponized-information-outbreak-a-case-study-on-covid-19-bioweapon-myths-and-the-asian-conspiracy-meme/

Hi David,

Thank you for a paper that examines a very important issue in social media communication. I notice that you mention Telegram and 4Chan as two un-moderated platforms that facilitate hate speech. The report you summarise is very interesting but seems limited to an examination of Facebook and Instagram. I wondered if you found other platforms that facilitated hate speech during the course of your research for this paper? There is very little mention of Twitter and I’m particularly interested to know how this platform contributes to or challenges hate speech online given its very large user base. I’d love to know what you think.

Deepti

Hi Deepti,

Thanks for taking the time to read and comment on the article. Just to clarify, I mentioned that Telegram and 4Chan had “little to no moderation”. To expand on this, while they may have a level of moderation, in a lot of cases there are ways for individuals to skirt the rules and promote hate speech. One example of how hate speech is allowed to propagate is that the 4Chan rules section clearly states racism is prohibited on the site, but racism isn’t moderated in one of their discussion boards, where it is quite prevalent (4Chan, n.d.). A fantastic deep dive into the data and statistics behind the hate multiverse can be found in the article I cited, ‘Online hate network spreads malicious COVID-19 content outside the control of individual social media platforms’, where Telegram and 4Chan are mentioned prominently throughout.

To answer your question, I think we are rather spoiled for choice when it comes to social media platforms in this digital age, so while there were other platforms that I found in my research into this topic (Twitter, Gab and VKontakte), I decided to narrow my focus to the 4 primary platforms listed in my article. As for Twitter, it falls into a similar basket to Facebook in that hateful content is moderated and restricted on the platform; however, hate groups are circumventing these restrictions by establishing networks outside of the moderation and then distributing short but very active cycles of hate on the moderated platforms (i.e. Twitter) before they get removed again (Velásquez et al., 2021).

Regarding Twitter too it will be interesting to see how it evolves over the coming year as I believe Elon Musk’s bid to buy out the company is going ahead.

Daniel

4Chan. (n.d.) Rules. https://www.4channel.org/rules

Velásquez, N., Leahy, R., Johnson, R. N., Lupu, Y., Sear, R., Gabriel, N., Jha, O. K., Goldberg, B., & Johnson, N. F. (2021). Online hate network spreads malicious COVID-19 content outside the control of individual social media platforms. Scientific Reports (Nature Publisher Group), 11(1). http://dx.doi.org/10.1038/s41598-021-89467-y

Hi Daniel, I found your paper fascinating, and I agree that hate groups are creating a voice for themselves online, especially through social media, which gives them a platform to distribute information, connect with others and build a community. I find the Spiral of Silence theory interesting in how it leads people to think about their opinions before publicly stating them. The fear of social isolation definitely impacts people’s need to make negative comments and helps in silencing hate groups in Australia. Although Australia is a very multicultural society, how do you think hate groups find a sense of belonging in such communities?

I do agree that there is an abundance of choice for social media platforms and that they have different standards and moderation. Although a page or group can be shut down, it is very hard to eradicate hate groups as they have several forms of communication. What do you think social media platforms can do better to shut down such communities?

Hi Andrea,

I’m glad you found the paper an interesting read. The Spiral of Silence theory was something new to me as well, so it was interesting to read about how the fear of social isolation can influence how minorities act and speak in public settings. I think the potential for these hate groups to utilise the anonymity afforded to them by these platforms really does eliminate that fear of social isolation. As to your question, I believe that, like other communities on these platforms, they can fall victim to a degree of confirmation bias, so once users are in these communities they’re surrounded by individuals of similar opinions, which helps them to develop that sense of belonging. If we can intercept that link and stop the spread of hate content, we might be able to prevent these communities from forming to begin with.

I think it’s very difficult for these platforms to single-handedly take on the fight, and it is only going to be won when corporations work together with governments to develop strategies to crack down on hate speech. Interestingly, it was only a few hours ago that Business Insider posted an article titled “EU warns Elon Musk that being too lax on Twitter moderation could get the platform banned in Europe” (Hamilton, 2022). Ideally corporations should be willingly cooperating with governments to crack down on hate speech; however, if corporations do object to moderation, I think there are ways for governments to hit the platforms that support hate speech where it hurts, which is their wallets.

Daniel

Hamilton, I. A. (2022, April 27). EU warns Elon Musk that being too lax on Twitter moderation could get the platform banned in Europe. Business Insider. https://www.businessinsider.com/elon-musk-twitter-moderation-eu-dsa-thierry-breton-2022-4

Hi Daniel,

I agree that other communities on online platforms can certainly fall victim to a degree of confirmation bias. Intercepting this link is very important to prevent the spread of hate online, but it’s easier said than done as there is very limited control in preventing this type of content. Strategies definitely need to be developed to prevent and monitor hate speech, and governments and corporations working together would be a great start. You raise a very interesting point in your last sentence; can you elaborate on this more?

Thanks for the response, David, and that’s an insightful observation, Andrea.

Part of the problem with monitoring these platforms is the very breadth of content that needs moderating on a daily basis.

The spiral of silence theory has been widely circulated in academic debates on hate speech and political speech. That said, some academics also point to the waning of the filter bubbles and echo chambers that form the basis of this spiral of silence (see, for example, Axel Bruns’s 2019 book Are Filter Bubbles Real?).

It would be interesting to know whether these spirals of silence are context- or platform-specific, i.e. are certain forms of hate speech, particular topics, or platforms likely to be met with more “silence” or less opposition than others?

I also wonder if there are other papers on filter bubbles, etc. 🙂

Deepti

Hey Daniel, your paper is relevant to social issues happening around the world. It’s great to see that Australia does have laws in place; however, social media and online content are still a grey area of the law, even in Australia. Similarly, it can sometimes be hard for governments to press charges for online sexual assault, as online assaults are not clearly defined. This is where tech companies are having to police and make their own judgments. While hate groups are filtered from websites like Facebook and Instagram, platforms like Telegram and WhatsApp allow these groups to form as they don’t even have access to these online communities. I do have a question: do you think groups like neo-Nazis, the KKK and many other hate groups have a right to be on these platforms, and do you believe it’s tech companies or governments that need to control and regulate online content?

Hi Daniel, your paper was very captivating. Nowadays, with easy access to the internet and its platforms, several groups online give people the opportunity to voice their opinions.

I agree with you that people are more conscious of what they are posting due to hate comments that can easily be spread. With many online groups targeting public figures, we can see how easy it is for them to spread their negativity and flood pages with their hate speech and comments. Taking the case of Johnny Depp and Amber Heard as an example, it can be seen on every platform how much negativity this case has generated, and hate groups have been enjoying posting many memes, videos, etc.

You can check out my paper on how social media helped in creating terror and panic during the covid-19 pandemic in Mauritius below:

https://networkconference.netstudies.org/2022/csm/374/social-media-helped-in-creating-terror-and-panic-during-the-covid-19-pandemic-in-mauritius/

Hi Daniel, interesting paper. I have seen first-hand hate speech online, particularly under articles related to minorities. I have noticed recently that many news sites are now turning off or limiting commenting under online articles, which I believe is a great first step in preventing hate speech from spreading. Many people may argue (mostly the far right) that banning commenting is limiting freedom of speech, however many fail to realise that social networking sites already moderate, or shadowban users. If we use social media platforms freely, we must obey their rules as, after all, they are private enterprises.

If you’d like to check out my paper, here’s the link:

https://networkconference.netstudies.org/2022/csm/912/social-media-communities-as-support-networks-empowering-others-during-crisis-situations/

Hi Sienna,

Great pickup! I also noticed this type of moderation lately on some Facebook groups that I follow. This is actually a popular form of moderation implemented by news pages, where they have listed moderation times during which a team is on hand to actively monitor content being posted to their articles (Vobič & Kovačič, 2014). This is a great additional step by companies to further assist with the moderation of their pages on social media platforms.

I’ll endeavor to check out your article too, I think you have chosen an interesting topic to research as I think it is very relatable to the current Ukraine crisis happening.

Daniel

Vobič, I., & Kovačič, M. P. (2014). KEEPING HATE SPEECH AT THE GATES: MODERATING PRACTICES AT THREE SLOVENIAN NEWS WEBSITES. 14.

Hello Daniel,

Thanks for posting your paper for us to read, it was a very interesting topic to learn about.

When thinking about hate groups and communities that spend their time spreading hate to others, one recent event comes to mind. Not so long ago a live streamer on Twitch spent their time whilst streaming directing their viewers to go and harass another streamer, something that is blatantly against the rules of the platform. Thankfully the streamer that was spreading hate was quickly banned for their actions. I think this is a good example of moderation done right, as opposed to some of the social media platforms that you mentioned. And while I do believe that moderation of social media is important, do you think that in some instances moderation can be taken too far? For example, moderators taking down posts that they just do not agree with, rather than the post being against the rules.

I would be interested to hear your opinion 😊

Sam

Hi Samuel,

Thanks for taking the time to read the article and comment! It 100% can be taken too far on both the human and computer-aided sides. Unfortunately, algorithms that some social media platforms adopt have been shown to inadvertently exhibit discriminatory bias in the past (Taylor, 2021). As for the human element, there is also a lot of pressure on moderators to ensure that moderation is both fair and effective in its delivery. We live in a time where freedom of expression is a paramount concern of both users and platforms, so it’s key that platforms adopt tailored moderation methods that suit the platform and install fall-backs to prevent possible overreactions or errors by moderating teams. Harmony between moderation teams and users is pivotal in helping to create an environment where users are able to input responses to assist the moderation team if they do misjudge a case (Vobič & Kovačič, 2014). I personally don’t relish the idea of ever being part of a moderation team and I think it’s best to understand that we as people can make mistakes, but we should continue to strive towards creating that safe and inclusive space for everyone. Give thanks to the moderation team!

Daniel

Taylor, T. (2021, November 21). Facebook knew its algorithms were biased against people of color. Mail Online. https://www.dailymail.co.uk/news/article-10227659/Facebook-knew-algorithms-biased-against-people-color.html

Vobič, I., & Kovačič, M. P. (2014). KEEPING HATE SPEECH AT THE GATES: MODERATING PRACTICES AT THREE SLOVENIAN NEWS WEBSITES. 14.

Hi Daniel,

Your article was extremely interesting to read as it sits pretty adjacent to my own, which covers how ISIS has utilised Telegram’s platform affordances to coordinate, collaborate and recruit new members – feel free to read it here: https://networkconference.netstudies.org/2022/csm/1103/isis-terrorising-telegram-how-the-isis-extremist-community-have-harnessed-the-applications-affordances-to-collaborate-coordinate-and-communicate/.

I’m interested to know whether you think there will be a time when social media sites will be completely free of hate groups and all those who use the platforms maliciously?

I’m also interested to know what you think the effect is on specific hate group communities when their posts and/or groups are removed from sites like Facebook or Telegram? Does it weaken the ties of such communities or do you think it bonds them even more closely together?

Kim

Hi Kim,

Thanks for sharing your post and I’ll definitely have to check it out! It really is quite interesting to see how these groups can utilise multiple platforms together to establish these networks of hate. Being optimistic, I would like to believe that there will be a time in the future where these hate groups aren’t afforded these opportunities to coerce others into spreading malice and hate; realistically, however, it is a gargantuan task that will require the attention and action of both corporations and governments around the world. There are countless social media platforms available to users, so while Telegram is the current favourite of hate groups for establishing these multi-platform connections, there are dozens akin to it that are also seemingly unmoderated and readily available for these hate groups to establish their hubs on.

While hate groups do walk a fine line around platform policies, they certainly must anticipate a shorter life cycle on the major social media platforms. If in this period they are successful in influencing any potential candidates, those candidates will be vetted and moved on to the less moderated platforms (Velásquez et al., 2021). This alternate platform is where the frustration of such removals may work to strengthen the camaraderie and bonding of hate group members, so if platforms are able to continually strengthen anti-hate speech policies and increase moderation to prevent users from being recruited in the first place, we might indeed be able to weaken such groups and strike a blow to their morale.

Daniel

Velásquez, N., Leahy, R., Johnson, R. N., Lupu, Y., Sear, R., Gabriel, N., Jha, O. K., Goldberg, B., & Johnson, N. F. (2021). Online hate network spreads malicious COVID-19 content outside the control of individual social media platforms. Scientific Reports (Nature Publisher Group), 11(1). http://dx.doi.org/10.1038/s41598-021-89467-y